Rev Bras Anestesiol. 2019;69(2):168–176

REVISTA BRASILEIRA DE ANESTESIOLOGIA
Official Publication of the Sociedade Brasileira de Anestesiologia
www.sba.com.br

SCIENTIFIC ARTICLE

YouTube as an informational source for brachial plexus blocks: evaluation of content and educational value

Onur Selvi a,∗, Serkan Tulgar a, Ozgur Senturk a, Deniz I. Topcu b, Zeliha Ozer a

a Maltepe University, Faculty of Medicine, Department of Anesthesiology and Reanimation, Istanbul, Turkey
b Gazi University, Institute of Health Sciences, Department of Medical Biochemistry, Ankara, Turkey

Received 30 March 2018; accepted 5 November 2018
Available online 21 December 2018

KEYWORDS
YouTube; Anesthesia; Brachial plexus blocks

Abstract

Background and objectives: YouTube, the most popular video-sharing website, contains a significant number of medical videos, including videos of brachial plexus nerve blocks. Despite the widespread use of this platform as a source of medical information, there is no regulation of the quality or content of the videos. The goals of this study are to evaluate the content of YouTube material relevant to the performance of brachial plexus nerve blocks and its quality as a visual digital information source.

Methods: The YouTube search was performed using keywords associated with brachial plexus nerve blocks, and 86 of the 374 videos retrieved were included in the final watch list. The assessors scored the videos separately according to two questionnaires. Questionnaire-1 (Q1) was prepared according to the ASRA guidelines, with Miller's Anesthesia as a reference textbook, and Questionnaire-2 (Q2) was formulated using a modification of the criteria in the Evaluation of Video Media Guidelines.

Results: A total of 72 ultrasound-guided and 14 nerve-stimulator-guided block videos were evaluated. In Q1, for ultrasound-guided videos, the lowest scores were for Q1-5 (1.38), regarding complications, and the highest scores were for Q1-13 (3.30), regarding the sono-anatomic image. In videos with a nerve stimulator, the lowest and highest scores were given for Q1-7 (1.64), regarding the equipment, and Q1-12 (3.60), regarding the explanation of muscle twitches, respectively. In Q2, 65.3% of ultrasound-guided and 42.8% of nerve-stimulator-guided block videos had worse than satisfactory scores.

Conclusions: The majority of the videos examined for this study lack the comprehensive approach necessary to safely guide someone seeking information about brachial plexus nerve blocks.

© 2018 Published by Elsevier Editora Ltda. on behalf of Sociedade Brasileira de Anestesiologia. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/).

∗ Corresponding author.
E-mail: (O. Selvi).

https://doi.org/10.1016/j.bjane.2018.12.005
0104-0014/© 2018 Published by Elsevier Editora Ltda. on behalf of Sociedade Brasileira de Anestesiologia. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/).

Brachial plexus videos in YouTube 169

KEYWORDS
YouTube; Anesthesia; Brachial plexus blocks

YouTube as an informational source on brachial plexus blocks: evaluation of content and educational value

Abstract

Background and objectives: YouTube, the most popular video-sharing site, contains a significant number of medical videos, including brachial plexus blocks. Despite the widespread use of this platform as a source of medical information, there is no regulation of the quality or content of the videos. The objective of this study is to evaluate the content of YouTube material relevant to the performance of brachial plexus block and its quality as a source of digital visual information.

Methods: The YouTube search was performed using keywords associated with brachial plexus block, and, of 374 videos, 86 were included in the watch list. The assessors rated the videos separately according to the questionnaires. Questionnaire-1 (Q1) was prepared according to the ASRA guidelines, with Miller's Anesthesia as a reference book, and Questionnaire-2 (Q2) was formulated using a modification of the criteria in the Evaluation of Video Media Guidelines.

Results: In total, 72 videos of ultrasound-guided blocks and 14 videos of nerve-stimulator blocks were evaluated. In Q1, for the videos presenting ultrasound-guided blocks, the lowest scores were for Q1-5 (1.38), regarding complications, and the highest scores were for Q1-13 (3.30), regarding the sono-anatomic image. In the videos presenting nerve-stimulator blocks, the lowest and highest scores were given for Q1-7 (1.64), regarding the equipment, and Q1-12 (3.60), regarding the explanation of muscle contractions, respectively. In Q2, 65.3% of the ultrasound-guided blocks and 42.8% of the nerve-stimulator blocks had scores below satisfactory.

Conclusions: Most of the videos examined for this study lack the comprehensive approach necessary to safely guide people seeking information about brachial plexus block.


Introduction

YouTube (YouTube©, www.youtube.com, YouTube LLC, San Bruno, USA), used as a visual reference guide, is one of the most popular digital information sources currently available, with more than 4 billion videos watched every day. The Mayo Clinic's healthcare social media listings name more than 700 health-related organizations in the United States of America alone that have a presence on YouTube. Some of these videos are prepared for healthcare providers as educational visual guides to new interventions.1 However, there are no regulations or standards governing the educational aspects of the videos available on YouTube. Misleading information has been shared on YouTube, which could pose a risk to healthcare professionals or their patients.2

On the other hand, e-learning methods such as video recordings have become an important part of medical education.3–6 Many studies have shown that learning through visual sources has many advantages over conventional didactic training methods, both for medical students and in other areas of medical training, including regional anesthesia.7 In regional anesthesia education and training in particular, clear visual guidance is valuable for clarifying the complex interactions of anatomy, manual skills, physiology, and clinical judgment.

Several regional anesthesia institutions regularly publish comprehensive educational multimedia materials for such advanced interventions; however, such proprietary materials may not achieve the dissemination impact of YouTube. Increasingly, YouTube is being recognized as a potentially useful source of healthcare education and training material.8 On YouTube, one can easily find information on a complex idea relayed in a basic visual format. However, accurate information can currently be displayed on YouTube in a way that is disorganized, disjointed, or even misleading.1

To date, YouTube videos of neuraxial block techniques and lumbar punctures have been assessed for patient safety, consistency with a scientific approach, and quality.9,10 The aim of this study was to evaluate YouTube videos relevant to the performance and preparation of brachial plexus nerve blocks, using the ASRA guidelines and Miller's Anesthesia as reference texts.11,12

Methods

Video selection

Brachial plexus nerve block (BPNB) procedures were selected by consensus of the authors as the target group of YouTube videos to be evaluated. The YouTube search for videos of axillary, infraclavicular, interscalene, and supraclavicular nerve blocks, which are extensively used for shoulder, arm, and hand surgery, was completed on 06.10.2017 by the main investigator, an anesthesiologist with 8 years of experience in anesthesia. The selected keywords were “interscalene block ultrasound”, “interscalene block nerve stimulator”, “supraclavicular block ultrasound”, “supraclavicular block nerve stimulator”, “infraclavicular block ultrasound”, “infraclavicular block nerve stimulator”, “axillary block ultrasound”, and “axillary block nerve stimulator”.

Establishment of assessor team

One consultant anesthesiologist and three experienced anesthesiologists with more than 7 years' experience in anesthesia were selected as the assessor team. All of them perform the blocks mentioned above safely in their daily routine and also teach them as part of a trainee education program. Two of them held Turkish Regional Anesthesia Society certificates in ultrasound-guided regional anesthesia training. The main investigator, as team leader, organized a workshop in which randomly selected lower extremity regional anesthesia videos from YouTube were scored by the assessors on a form specifically prepared for data entry. Information gathered during the workshop was used to identify any ambiguous points about data recording, the scoring system, and the exclusion criteria, leading to clarification of the study objectives. Any disputed topics related to these elements were resolved by consensus.

Watch list

For each keyword, the main investigator prescreened the first 100 recent and popular videos to create a “watch list”; further pages were not included in the search because of the frequency of obsolete, irrelevant, or repeated material. The search was limited to the first 100 videos because the investigators aimed to evaluate not all videos, but only those with the highest likelihood of being viewed. Because the YouTube search algorithm automatically alters a video's rank in the display list, and its popularity, as its viewer count changes, the search results were listed in a single session before any such changes occurred. The Uniform Resource Locator (URL) addresses of the videos were copied into an Excel sheet to form the watch list. The final list was reviewed by the 4 assessors, and consensus on the final list was established according to the exclusion criteria. Videos in languages other than English, with unrelated content, or shorter than 1 minute were excluded. Videos lasting longer than 15 minutes were also excluded, because their length might jeopardize the accuracy of the assessment.

Questionnaire 1

Q1 had 18 questions about the technique itself, covering safety, hygiene, anatomy, landmarks, complications, local anesthetic use, and equipment. Each assessor scored each question from 1 to 5 (1 being very bad and 5 being excellent). Question 10 was asked to determine nerve-stimulator usage, and questions 11 and 12 were examined only for videos guided solely by a nerve stimulator. The last six questions of Q1 were answered only for ultrasound-guided blocks; they related to the ultrasound image, needle manipulation, and interpretation of the sonographic image. Questionnaire 1 (Q1, Table 1) was prepared according to the Delphi method, based on the standard procedure definitions of the American Society of Regional Anesthesia and the Miller's Anesthesia textbook.11,12 The four assessors completed Q1 for each video individually.
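The rules above on which Q1 items apply to which videos can be summarized in a short sketch. The function name and the "US"/"NS" labels are hypothetical, and the sketch assumes that Q10 is a yes/no screening item rather than a scored one, which matches the item lists shown in Tables 4 and 5.

```python
def scored_q1_items(guidance: str) -> list[int]:
    """Return the Q1 item numbers scored on the 1-5 scale for one video.

    guidance: "US" for an ultrasound-guided video, "NS" for a video guided
    solely by a nerve stimulator (hypothetical labels).
    Q10 (was a nerve stimulator used?) is treated as a yes/no screening item.
    """
    common_items = list(range(1, 10))  # Q1-Q9 apply to every video
    if guidance == "NS":
        # Q11-Q12: stimulation threshold and muscle twitches
        return common_items + [11, 12]
    if guidance == "US":
        # Q13-Q18: sono-anatomy, needle visibility, probe choice, spread
        return common_items + list(range(13, 19))
    raise ValueError(f"unknown guidance type: {guidance!r}")


us_items = scored_q1_items("US")
ns_items = scored_q1_items("NS")
print(len(us_items), len(ns_items))  # 15 11
```

Under this assumption an ultrasound-guided video is scored on 15 items and a nerve-stimulator video on 11.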

Questionnaire 2

Questionnaire 2 (Q2, Table 2), which included 14 items regarding the preparation and generic video quality of each video, was also completed individually. In this second part, the investigators evaluated the videos according to the Guidelines for the Preparation and Evaluation of Video Career Media by the American National Career Development Association (NCDA).13 Each item was marked from 0 to 5 (0, does not apply; 1, unsatisfactory; 2, poor; 3, satisfactory; 4, good; 5, outstanding). The final scores of each video were averaged and grouped as follows: 0–13, unsatisfactory; 14–27, poor; 28–41, satisfactory; 42–54, good; 56–70, outstanding.
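A minimal sketch of the Q2 scoring rule described above, assuming that each assessor's 14 item scores are summed and the four assessors' totals are then averaged (which is the same as summing the per-item means). Because averaged totals need not be integers and the bands as listed leave 55 unassigned, the sketch assumes each band runs up to the next band's lower bound; the function names are hypothetical.

```python
from bisect import bisect_right

N_ITEMS = 14  # Q2 items, each marked 0-5
BAND_LOWER_BOUNDS = [0, 14, 28, 42, 56]
BAND_LABELS = ["unsatisfactory", "poor", "satisfactory", "good", "outstanding"]


def q2_video_total(scores_by_assessor: list[list[int]]) -> float:
    """Average, over assessors, of each assessor's summed Q2 item scores."""
    for scores in scores_by_assessor:
        if len(scores) != N_ITEMS or any(not 0 <= s <= 5 for s in scores):
            raise ValueError("each assessor needs 14 item scores between 0 and 5")
    totals = [sum(scores) for scores in scores_by_assessor]
    return sum(totals) / len(totals)


def q2_band(total: float) -> str:
    """Map a 0-70 Q2 total to its band; each band ends where the next begins."""
    if not 0 <= total <= N_ITEMS * 5:
        raise ValueError("Q2 totals must lie between 0 and 70")
    return BAND_LABELS[bisect_right(BAND_LOWER_BOUNDS, total) - 1]


# Four assessors who each mark every item as satisfactory (3):
total = q2_video_total([[3] * N_ITEMS for _ in range(4)])
print(total, q2_band(total))  # 42.0 good
```

Note that a video marked 3 (satisfactory) on every item totals 42, the lower bound of the "good" band.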

Final evaluation meeting for disputed videos

A consensus score was reached in a focus group meeting for those videos with Fleiss' kappa scores < 0.20 in Q1. The consultant anesthesiologist acted as moderator during the meeting. If ambiguities about a video could not be reconciled, “voting” was used to reach a final score for the debated video.

Statistical analyses

R 3.4.3 (R Core Team, 2017) was used for all data cleaning, analysis, and visualization processes and for the inter-rater reliability (IRR) calculation.14,15 Agreement between assessors for each video was defined according to Fleiss' kappa scores: no agreement (<0.00), insignificant agreement (0.00–0.20), moderate agreement (0.21–0.40), most part agreement (0.41–0.60), significant agreement (0.61–0.80), and excellent agreement (0.81–1.00).
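The per-video agreement statistic can be sketched as follows. This is a plain-Python version of the standard Fleiss' kappa formula, not the R code the authors used, and it assumes that, for each video, the Q1 questions are the rated subjects and the four assessors are the raters. When every rating falls into a single category the formula reduces to 0/0; the sketch returns 1.0 in that case by convention (an assumption). The agreement labels reuse the bands listed above, assuming negative values mean "no agreement" and each upper bound is inclusive.

```python
from collections import Counter


def fleiss_kappa(ratings: list[list[int]]) -> float:
    """Fleiss' kappa for a subjects x raters table of categorical scores.

    ratings[i] holds the scores every rater gave to subject i (here: one
    Q1 question of one video); all rows need the same number of raters.
    """
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(row) != n_raters for row in ratings):
        raise ValueError("every subject needs the same number (>= 2) of ratings")

    category_totals = Counter()
    agreement = []
    for row in ratings:
        counts = Counter(row)
        category_totals.update(counts)
        # Share of rater pairs that agree on this subject.
        agreeing_pairs = sum(c * (c - 1) for c in counts.values())
        agreement.append(agreeing_pairs / (n_raters * (n_raters - 1)))

    p_bar = sum(agreement) / len(ratings)  # mean observed agreement
    n_total = len(ratings) * n_raters
    p_e = sum((c / n_total) ** 2 for c in category_totals.values())  # chance agreement
    if p_e == 1.0:
        return 1.0  # all ratings identical: 0/0, treated as perfect agreement
    return (p_bar - p_e) / (1.0 - p_e)


AGREEMENT_BANDS = [  # (inclusive upper bound, label)
    (0.20, "insignificant agreement"),
    (0.40, "moderate agreement"),
    (0.60, "most part agreement"),
    (0.80, "significant agreement"),
    (1.00, "excellent agreement"),
]


def agreement_label(kappa: float) -> str:
    if kappa < 0:
        return "no agreement"
    for upper, label in AGREEMENT_BANDS:
        if kappa <= upper:
            return label
    raise ValueError("kappa cannot exceed 1")


# Three questions rated by four assessors:
k = fleiss_kappa([[1, 1, 1, 1], [2, 2, 2, 2], [1, 1, 2, 2]])
print(round(k, 3), agreement_label(k))  # 0.556 most part agreement
```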

Results

Video

selection

72

ultrasound-guided

and

14

nerve-stimulator

guided

block

videos,

a

total

of

86

videos

out

of

374

were

evaluated

in

YouTube.

A

total

of

288

videos

were

excluded

for

the

fol-

lowing

reasons:

8

non

English,

288

not

on-topic,

36

repeated

and

16

too

long/short.

The

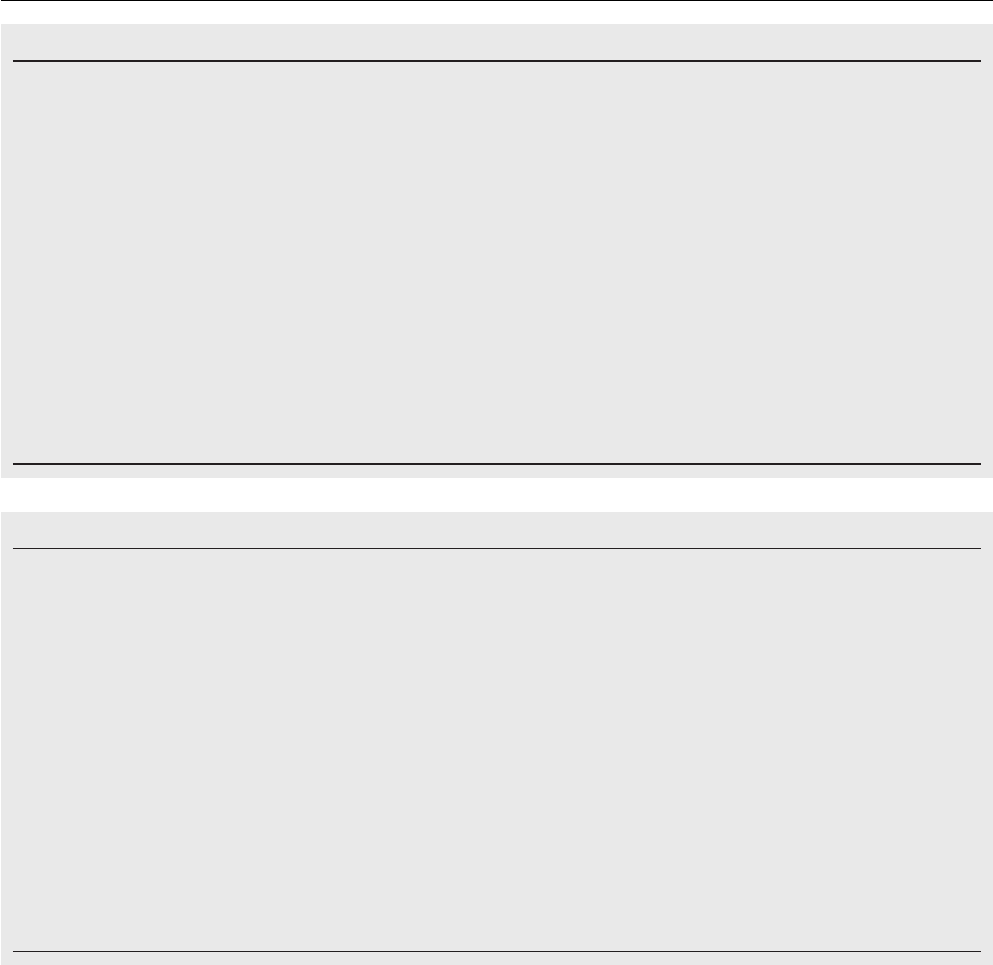

exclusion

criteria

and

numbers

for

the

whole

evaluation

process

can

be

seen

in

Table

3

and

Fig.

1.
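The exclusion counts can be cross-checked against Table 3 with a few lines of arithmetic. The dictionary below simply transcribes the table's rows; the key names are our own shorthand.

```python
# (total, non-English, off-topic, too long/short, repeated, selected), from Table 3
TABLE3 = {
    "interscalene NS": (31, 1, 25, 1, 1, 3),
    "interscalene US": (41, 2, 13, 4, 1, 21),
    "supraclavicular NS": (34, 1, 28, 0, 1, 4),
    "supraclavicular US": (65, 3, 37, 2, 3, 20),
    "infraclavicular NS": (37, 1, 27, 1, 7, 1),
    "infraclavicular US": (60, 0, 29, 1, 15, 15),
    "axillary NS": (20, 0, 13, 1, 0, 6),
    "axillary US": (86, 0, 56, 6, 8, 16),
}

# Each row's exclusions plus selected videos must add up to its total.
for block, (row_total, *excluded, row_selected) in TABLE3.items():
    assert sum(excluded) + row_selected == row_total, block

column_sums = [sum(col) for col in zip(*TABLE3.values())]
total, non_english, off_topic, length, repeated, selected = column_sums
print(total, non_english, off_topic, length, repeated, selected)
# 374 8 228 16 36 86
print("excluded:", total - selected)  # excluded: 288

us_selected = sum(row[-1] for key, row in TABLE3.items() if key.endswith("US"))
ns_selected = sum(row[-1] for key, row in TABLE3.items() if key.endswith("NS"))
print(us_selected, ns_selected)  # 72 14
```

The off-topic column sums to 228, which together with the other exclusion columns accounts for the 288 excluded videos.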


Table 1 Questionnaire 1.

| Item | Question |
|---|---|
| Q1 | In which kind of operation is this block applicable? Was this information clearly explained? |
| Q2 | Was there a clear explanation of the targeted skin dermatomes innervated by the nerve? |
| Q3 | Were anatomical landmarks clearly explained or marked? |
| Q4 | Were important vessels and nerve structures in close relation to the targeted nerve clearly explained? |
| Q5 | Were possible complications related to this block technique explained? |
| Q6 | Was the information on sterilization procedures clearly explained or emphasized? |
| Q7 | Was the information about the nerve stimulator device and needle choice clearly explained? |
| Q8 | Was the information on skin local anesthetic infiltration (volume, name of medication) clearly explained? |
| Q9 | Was the information about the local anesthetic substance clearly explained? |
| Q10 | Was a nerve stimulator used in this block? |
| Q11 | If YES, was the safe threshold level for electrical impulses clearly explained? |
| Q12 | If YES, were the muscle twitches related to the stimulated nerve clearly explained? |
| Q13 | Were the sono-anatomic image recording and the anatomical structures in the recording clear and easy to perceive? |
| Q14 | Was the ultrasound image of the needle visible and easy to follow? |
| Q15 | Were the instructions for depth, alignment, and direction movements of the needle clearly explained? |
| Q16 | Was the technical information on probe selection and frequency of the ultrasound device explained? |
| Q17 | Was the information about the in-plane or out-of-plane technique presented in the video? |
| Q18 | Was the information about the local anesthetic spread explained? |

Table 2 Questionnaire 2.

| Item | Question |
|---|---|
| Q1 | Was the aim of the video clearly stated, and was it explained in the first quarter of the video? |
| Q2 | Did the title or name of the video match the aim of the video? |
| Q3 | Were the design and the content of the video suitable for a targeted educational aim? |
| Q4 | Were the skills and the technique of the procedure explained using a standard, comparable, “step by step” method? |
| Q5 | Was the information given in the video useful for viewers to develop/enhance their skill base? |
| Q6 | Was the content of the video appropriate for the health and safety of both the patient and the practitioner? |
| Q7 | Was the picture quality acceptable regarding colors and clarity? |
| Q8 | Was the video sound quality acceptable? (No sound should be scored as zero.) |
| Q9 | Was the length of the video in balance with its content? |
| Q10 | Was the information on the date of production or release, the producers, and the references clearly explained? |
| Q11 | Were objectives, learning tasks, and terminology clearly stated in the video, enabling viewers to address those tasks? |
| Q12 | Did the video have stop-and-discuss points or additional aids, such as scripts and/or summarized information on the procedure? |
| Q13 | Was any information given on a way to evaluate the effectiveness and reproducibility of the video? |
| Q14 | Did the content of the video stimulate viewers to make the transition from passive viewer to active practitioner in the application of the technique? |

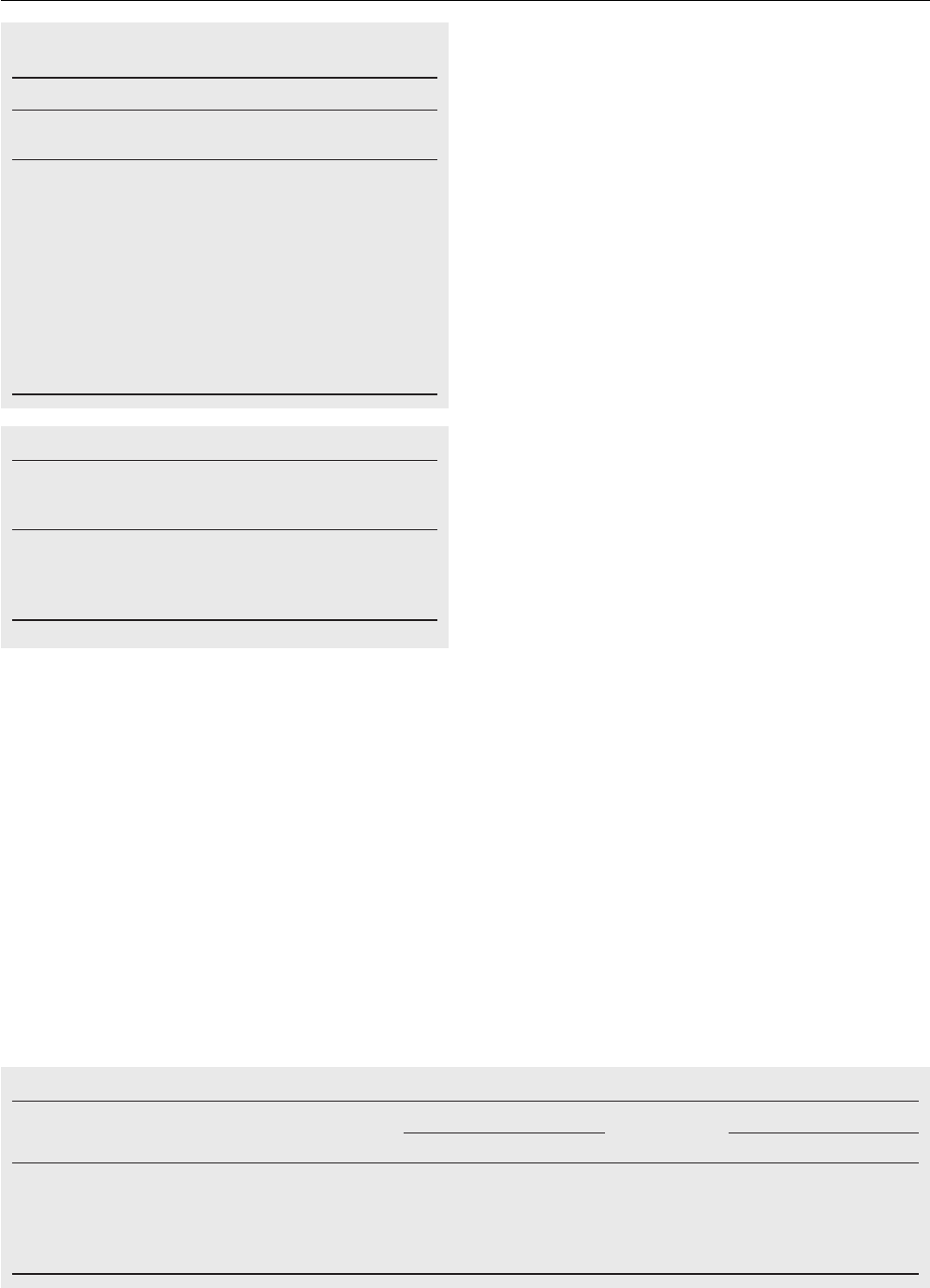

Results of Q1

The final Q1 scores for the 14 nerve-stimulator-guided (PNS) videos and the 72 ultrasound-guided (USG) videos are presented in Figs. 2 and 3, respectively. As the heat maps show, the first videos in the watch list for each block technique scored higher than subsequent videos, and the last videos in the watch lists had lower scores.

When we evaluated the average score for each question in Q1, the lowest score in ultrasound-guided videos was 1.38 (median = 1; 25th percentile = 1; 75th percentile = 1), for Q1-05, related to information on possible complications, and the highest was 3.30 (median = 3; 25th percentile = 2; 75th percentile = 5), for Q1-13, related to sono-anatomy (Table 4). For the videos with a nerve stimulator, the lowest score was 1.64 (median = 1; 25th percentile = 1; 75th percentile = 2), for Q1-07, related to the nerve stimulator device and needle choice, and the highest was 3.60 (median = 4; 25th percentile = 3; 75th percentile = 5), for Q1-12, related to information on muscle twitches (Table 5).
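The per-question summaries in Tables 4 and 5 (mean, median, 25th and 75th percentiles) can be sketched with Python's standard library, assuming each video contributes one score per question (for example, an averaged or consensus score). The authors used R; `statistics.quantiles(..., method="inclusive")` uses the same linear interpolation as R's default quantile type, although which quantile type the authors chose is not stated. Because the median is itself the 50th percentile, it can never fall outside the 25th-75th percentile range, which gives a quick consistency check on reported values.

```python
import statistics


def question_summary(scores: list[float]) -> dict[str, float]:
    """Mean, median and quartiles of one question's scores across videos."""
    p25, _, p75 = statistics.quantiles(scores, n=4, method="inclusive")
    summary = {
        "p25": float(p25),
        "mean": float(statistics.mean(scores)),
        "median": float(statistics.median(scores)),
        "p75": float(p75),
    }
    # Consistency check: the median can never fall outside the quartiles.
    assert summary["p25"] <= summary["median"] <= summary["p75"]
    return summary


# Hypothetical scores for one question across five videos:
print(question_summary([1, 1, 1, 2, 5]))
# {'p25': 1.0, 'mean': 2.0, 'median': 1.0, 'p75': 2.0}
```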

According to the result of question 10 in Q1, which evaluates nerve stimulator usage, a nerve stimulator was combined with ultrasound to demonstrate muscle twitches in 11 of the 72 ultrasound-guided videos; however, only 4 videos gave information about the lowest safe threshold for electrical impulses. A total of 26 videos were accompanied by a “written only” explanation, and seven videos provided no description (written or verbal) of the procedure being undertaken. Among the ultrasound-guided videos, 36 shared sonographic recordings of successful interventions and 3 were animations. Only 17 videos stated whether the block was performed using an out-of-plane or in-plane technique.


Table 3 Video selection.

| Block | Total number of videos | Non-English | Off-topic | Too long/short | Repeated videos | Selected videos |
|---|---|---|---|---|---|---|
| Interscalene B. (nerve stimulator guided) | 31 | 1 | 25 | 1 | 1 | 3 |
| Interscalene B. (ultrasound guided) | 41 | 2 | 13 | 4 | 1 | 21 |
| Supraclavicular B. (nerve stimulator guided) | 34 | 1 | 28 | 0 | 1 | 4 |
| Supraclavicular B. (ultrasound guided) | 65 | 3 | 37 | 2 | 3 | 20 |
| Infraclavicular B. (nerve stimulator guided) | 37 | 1 | 27 | 1 | 7 | 1 |
| Infraclavicular B. (ultrasound guided) | 60 | 0 | 29 | 1 | 15 | 15 |
| Axillary B. (nerve stimulator guided) | 20 | 0 | 13 | 1 | 0 | 6 |
| Axillary B. (ultrasound guided) | 86 | 0 | 56 | 6 | 8 | 16 |

B., block.

Figure 1 Flow chart of the study. (Search for videos with keywords in YouTube on 06.10.2017, total 374 videos; assessor team of 1 consultant and 3 experienced anesthesiologists; selection of the final watch list of nerve stimulator guided and ultrasound guided brachial plexus block videos; final videos n = 86; final evaluation meeting for debated videos; data analysis.)

In Q1, ultrasound-guided interscalene videos had higher scores than the other ultrasound-guided blocks, and ultrasound-guided axillary blocks had the lowest scores. Among the nerve-stimulator videos, axillary blocks had the highest scores, whereas supraclavicular blocks had the lowest average scores in Q1 (Table 6).

IRR was examined for Q1. The kappa score was between 0.81 and 1.00 (excellent agreement) for 28 videos, 0.61–0.80 (significant agreement) for 8 videos, 0.41–0.60 (most part agreement) for 32 videos, and 0.21–0.40 (moderate agreement) for 18 videos. The highest and lowest kappa scores for Q1 were 1.00 and 0.23, respectively. Ten videos were scored as “unsatisfactory” for all questions, resulting in kappa scores of 1.0 for these videos.

Figure 2 Distribution of scores for the brachial plexus nerve block videos performed with nerve stimulator. (Heat map of average score, 0–5, by video and question; videos 1–3 interscalene, 4–7 supraclavicular, 8 infraclavicular, 9–14 axillary.)

Table 4 Question-based evaluation of the scores for ultrasound-guided brachial plexus block videos in Q1 (USG video scores by question).

| Question | 25th percentile | Mean | Median | 75th percentile |
|---|---|---|---|---|
| Q13 | 2 | 3.3 | 3 | 5 |
| Q04 | 2 | 3.03 | 3 | 5 |
| Q03 | 1 | 2.7 | 3 | 4 |
| Q14 | 1 | 2.7 | 3 | 4 |
| Q18 | 1 | 2.6 | 2 | 4 |
| Q15 | 1 | 2.6 | 2 | 4 |
| Q17 | 1 | 2.4 | 2 | 4 |
| Q01 | 1 | 1.9 | 1 | 2 |
| Q16 | 1 | 1.8 | 1 | 2 |
| Q08 | 1 | 1.8 | 1 | 2 |
| Q07 | 1 | 1.6 | 1 | 2 |
| Q06 | 1 | 1.6 | 1 | 1 |
| Q02 | 1 | 1.5 | 1 | 1 |
| Q09 | 1 | 1.4 | 1 | 1 |
| Q05 | 1 | 1.4 | 1 | 1 |

Results of Q2

The results of Q2, which reflect the preparation and generic video quality of the videos as educational material, can be seen in Table 7: 65.3% of ultrasound-guided videos and 42.8% of nerve-stimulator block videos had worse than satisfactory scores. Only 5 ultrasound-guided videos and 1 classical nerve-stimulator block video had outstanding results.
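The Q2 percentages in Table 7 follow directly from the band counts, and the short sketch below recomputes them; the dictionary transcribes Table 7 and the key names are our own. It also exposes a rounding detail: 6 of 14 nerve-stimulator videos is 42.857%, which rounds to 42.9%, whereas adding the already-rounded band percentages (7.1 + 35.7) gives the reported 42.8%.

```python
TABLE7_COUNTS = {
    "USG": {"unsatisfactory": 19, "poor": 28, "satisfactory": 11, "good": 9, "outstanding": 5},
    "NS": {"unsatisfactory": 1, "poor": 5, "satisfactory": 5, "good": 2, "outstanding": 1},
}


def band_percentages(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of videos in each Q2 band, rounded to one decimal place."""
    n_videos = sum(counts.values())
    return {band: round(100 * n / n_videos, 1) for band, n in counts.items()}


def percent_below_satisfactory(counts: dict[str, int]) -> float:
    """Unrounded share of videos scored 'unsatisfactory' or 'poor'."""
    below = counts["unsatisfactory"] + counts["poor"]
    return 100 * below / sum(counts.values())


for group, counts in TABLE7_COUNTS.items():
    print(group, sum(counts.values()), band_percentages(counts),
          round(percent_below_satisfactory(counts), 1))
# USG 72 {'unsatisfactory': 26.4, 'poor': 38.9, 'satisfactory': 15.3, 'good': 12.5, 'outstanding': 6.9} 65.3
# NS 14 {'unsatisfactory': 7.1, 'poor': 35.7, 'satisfactory': 35.7, 'good': 14.3, 'outstanding': 7.1} 42.9
```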

Figure 3 Distribution of scores for the ultrasound guided brachial plexus nerve block videos. (Heat maps of average score, 0–5, by video and question; panels: interscalene, supraclavicular, infraclavicular, and axillary videos.)


Table 5 Question-based evaluation of the scores for nerve-stimulator-guided brachial plexus block videos in Q1 (NS video scores by question).

| Question | 25th percentile | Mean | Median | 75th percentile |
|---|---|---|---|---|
| Q12 | 3 | 3.6 | 4 | 5 |
| Q03 | 2 | 3.1 | 3 | 4 |
| Q11 | 2 | 3.1 | 3 | 4 |
| Q01 | 1 | 2.5 | 2 | 4 |
| Q08 | 1 | 2.4 | 3 | 3 |
| Q02 | 1 | 2.3 | 2 | 4 |
| Q09 | 1 | 2.3 | 2 | 3.25 |
| Q04 | 1 | 2.3 | 2 | 3.25 |
| Q06 | 1 | 2.0 | 2 | 2 |
| Q05 | 1 | 1.6 | 1 | 2 |
| Q07 | 1 | 1.6 | 1 | 2 |

Table 6 Mean scores of the videos in Q1.

| Block | Mean score for nerve stimulator videos | Mean score for ultrasound videos |
|---|---|---|
| Axillary B. | 2.57 | 1.84 |
| Infraclavicular B. | 3.11 | 1.86 |
| Interscalene B. | 2.81 | 2.51 |
| Supraclavicular B. | 1.82 | 2.25 |

B., blocks.

Discussion

Because the core of ultrasound-guided nerve block education is “visual” in nature, using videos for regional anesthesia education can be highly beneficial for trainees. How to produce reliable visual materials and incorporate them into regional anesthesia education is, in itself, an important issue for medical practice. Accordingly, there are regional anesthesia videos created by professional institutions with high accuracy, quality, and credibility for use in regional anesthesia training, which require extensive planning and careful execution. As a complement to this visual guidance, recording regional anesthesia interventions and using the recordings to create visual feedback videos can also be extremely useful for self-assessment in regional anesthesia education. Even residents who are qualified in regional anesthesia can use such videos as a source of information when they have lost familiarity with a specific regional anesthesia block technique but still have to perform it.7,8,16–18

According to the results of the current study, the most highly rated videos were prepared by professional institutions. In these videos, the sonographic image and the positioning of the ultrasound probe shared the same screen, so that the viewer could observe the relationship between the alignment, tilting, pressure, and rotation maneuvers and the resulting USG view. These most highly rated videos included many of the aspects recommended by the new basic simulation assessment tool. This tool promised to improve the skills necessary for successful Needle Insertion Accuracy (NIA) in ultrasound-guided nerve blocks, such as “approach”, “alignment”, “movement”, “location”, and “targeting”. All these skills require three-dimensional thinking, interpretation of a two-dimensional screen, and hand-eye coordination. Essentially, this new visual, video-based regional anesthesia training device emphasizes the value of displaying both the hand position and the ultrasound image simultaneously on the same screen.7 Thus, when creating visual educational materials for ultrasound-guided regional anesthesia blocks, this technical approach can enhance the value of the videos. Such systematic videos need to demonstrate the location of the target nerve, the direction of the needle toward the target nerve, and the correct method of administering the local anesthetic to the target area.

Regulations on patient safety and quality standards have raised new issues for regional anesthesia education.19 To overcome these difficulties in regional block education, simulation-based training methods have been described in recent years that allow trainees to gain skills such as rotation, alignment, tilting, and targeting before they practice on real patients.20 Anesthesia trainees have been shown to perform these sonographic skills more successfully when provided with expert-guided feedback.21

Although we do not recommend YouTube videos as a learning tool on the basis of our findings, publishing recordings of these modern regional anesthesia training videos together with explanatory feedback points may help viewers understand the steps taken and errors made by trainees. Such videos, when made by reputable medical institutes, might be quite beneficial for regional anesthesia trainees or residents, who could refresh their knowledge of ultrasound-guided nerve blocks simply by watching easily available YouTube videos. In addition, institutes and their followers can easily meet on YouTube's social platform through these high-quality educational videos. Thus, regional anesthesia practitioners may meet and interact on an alternative, widely used platform to share and expand their experiences.

Table 7 Preparation and generic video quality of the videos (Q2).

| Evaluation | Score | USG videos, n | USG videos, % | NS videos, n | NS videos, % |
|---|---|---|---|---|---|
| Unsatisfactory | 0–13 | 19 | 26.4 | 1 | 7.1 |
| Poor | 14–27 | 28 | 38.9 | 5 | 35.7 |
| Satisfactory | 28–41 | 11 | 15.3 | 5 | 35.7 |
| Good | 42–54 | 9 | 12.5 | 2 | 14.3 |
| Outstanding | 56–70 | 5 | 6.9 | 1 | 7.1 |

The safety standard of procedure application in the videos was also ambiguous. In ultrasound-guided videos, the lowest average score was for question 5, which asks about the level of information on possible complications of the blocks; this downgrades the reliability of the videos in terms of safety. Among these same videos, 19 had no sound recording and relied on written instructions and signs to deliver the presentation, therefore failing to give adequate information on equipment, preparation, drug doses, patient positioning, and sterilization. Such videos are part of the apprenticeship-style training method, an educational approach that can be held responsible for preventing regional anesthesia residents from receiving standardized education.19

Unfortunately, short videos were unable to score well on a majority of Q1 and Q2 questions. Only 6 of the 86 videos received outstanding quality scores, and all of these were over seven minutes long. Therefore, it can be postulated that visual material prepared for regional anesthesia education should not be too short. Although some short videos contain valuable information and tips, they are often difficult to understand for someone who is new to the technique. Furthermore, in similar future studies based on visual material analysis, a longer “minimum time limit” could be applied as an exclusion criterion, depending on the targeted outcome.

The final questions of the Q2 assessment, which asked whether the videos provided enough detail to accurately reproduce the procedure in a clinical setting, received the lowest scores from the assessors. Only two videos received full points on Q2. This again indicates that YouTube videos are generally poorly prepared and may be inadequate as refresher material for the four upper-extremity peripheral nerve blocks selected for this study.

Among the videos examined in this study, ultrasound-guided BPNB videos outnumbered those using conventional nerve stimulators by about five to one (72 vs. 14). This reflects the increasing tendency to perform ultrasound-guided nerve blocks and also indicates the demand for such videos as learning and sharing tools on social platforms.

Health institutions, universities, and regional anesthesia associations could help spread more accountable and credible regional anesthesia videos on YouTube that prioritize patient safety over commercial concerns, so that commercial institutions and individual health providers may be inspired by the setup of these videos to produce similar high-quality videos. In this way, regional anesthesia videos on YouTube, a platform of great public impact and popularity, may come to provide more accurate and trustworthy information.

Limitations

The limitations of this study should be considered when reviewing our data. First, each assessor viewed the selected videos in the same order, according to the predetermined watch list; ideally, the assessors would have reviewed the videos in random order. Although the assessors watched the videos independently, randomizing the video order would have prevented a carry-over effect of one video on the judgment of the next from distorting the results. Finally, we did not examine the number of viewers for each video, which might be considered a good predictor of a video’s quality and “disseminative impact” [22]. However, previous studies have found no correlation between video quality and views per month [6,9], and this dissuaded us from analyzing viewer counts.
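The randomized viewing order described above as the ideal design is simple to prepare in advance. The Python sketch below is one possible approach, not the procedure used in this study: the video identifiers, assessor labels and seed are hypothetical, and each assessor receives an independently shuffled copy of the same watch list drawn from one seeded generator, so the orders can be documented and regenerated later.

```python
import random


def watch_orders(video_ids, assessors, seed=None):
    """Return an independently shuffled viewing order of the same videos for each assessor.

    All permutations come from one seeded generator, so passing the same seed
    regenerates the same orders. The input list is not modified.
    """
    rng = random.Random(seed)
    # rng.sample returns a new list containing every video exactly once, in random order.
    return {assessor: rng.sample(video_ids, k=len(video_ids)) for assessor in assessors}


if __name__ == "__main__":
    # Hypothetical identifiers: 86 videos and two assessors.
    videos = [f"V{i:02d}" for i in range(1, 87)]
    orders = watch_orders(videos, ["assessor_1", "assessor_2"], seed=2018)
    for assessor, order in orders.items():
        print(assessor, order[:5], "...")
```

Recording the seed alongside the watch lists would let the viewing orders be audited without storing every permutation.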

Conclusion

The utility, scientific rigor, and accountability of BPNB videos on YouTube do not match the platform’s modernity and mass availability. Most of the videos lack the systematic approach needed to safely guide someone seeking information about the BPNBs examined in this study. If professional institutions and universities publish more videos with predefined competencies on social media platforms such as YouTube, they may set a good example of safe and successful interventions.

Conflicts of interest

The authors declare no conflicts of interest.

Acknowledgments

The authors would like to thank Dr. George Shorten, member of the Department of Anesthesiology and Intensive Care, University College Cork, for his valuable contributions to this study.

References

1. Knight E, Intzandt B, MacDougall A, et al. Information seeking in social media: a review of YouTube for sedentary behavior content. Interact J Med Res. 2015;4:e3.

2. Azer SA, Algrain HA, AlKhelaif RA, et al. Evaluation of the educational value of YouTube videos about physical examination of the cardiovascular and respiratory systems. J Med Internet Res. 2013;15:e241.

3. Romanov K, Nevgi A. Do medical students watch video clips in eLearning and do these facilitate learning? Med Teach. 2007;29:484–8.

4. Mathis S, Schlafer O, Abram J, et al. Anesthesia for medical students: a brief guide to practical anesthesia in adults with a web-based video illustration. Anaesthesist. 2016;65:929–39.

5. DelSignore LA, Wolbrink TA, Zurakowski D, et al. Test-enhanced E-learning strategies in postgraduate medical education: a randomized cohort study. J Med Internet Res. 2016;18:e299.

6. Tackett S, Slinn K, Marshall T, et al. Medical education videos for the world: an analysis of viewing patterns for a YouTube channel. Acad Med. 2018;93:1150–6.

7. Das Adhikary S, Karanzalis D, Liu WR, et al. A prospective randomized study to evaluate a new learning tool for ultrasound-guided regional anesthesia. Pain Med. 2017;18:856–65.

8. Godwin HT, Khan M, Yellowlees P. The educational potential of YouTube. Acad Psychiatry. 2017;41:823–7.


9. Tulgar S, Selvi O, Serifsoy TE, et al. YouTube as an information source of spinal anesthesia, epidural anesthesia and combined spinal and epidural anesthesia. Rev Bras Anestesiol. 2017;67:493–9.

10. Rössler B, Lahner D, Schebesta K, et al. Medical information on the Internet: quality assessment of lumbar puncture and neuroaxial block techniques on YouTube. Clin Neurol Neurosurg. 2012;114:655–8.

11. American Society of Regional Anesthesia and Pain Medicine. Available at: https://www.asra.com/pain-resource/category/9/upper-extremity-blocks.

12. Miller RD. Miller’s anesthesia. 7th ed. Philadelphia: Elsevier/Churchill Livingstone; 2010. p. 1639–703.

13. National Career Development Association (NCDA). Guidelines for the Preparation and Evaluation of Video Career Media. Available at: https://www.ncda.org/aws/NCDA/asset_manager/get_file/3401 [accessed 17 May 2017].

14. R Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2017. Available at: https://www.R-project.org/ [accessed 3 July 2017].

15. Gamer M, Lemon J, Fellows I, Singh P. irr: various coefficients of interrater reliability and agreement. R package version 0.84; 2012. Available at: https://CRAN.R-project.org/package=irr [accessed 3 July 2017].

16. Pedersen K, Moeller MH, Paltved C, et al. Students’ learning experiences from didactic teaching sessions including patient case examples as either text or video: a qualitative study. Acad Psychiatry. 2018;42:622–9.

17. Sainsbury JE, Telgarsky B, Parotto M, et al. The effect of verbal and video feedback on learning direct laryngoscopy among novice laryngoscopists: a randomized pilot study. Can J Anaesth. 2017;64:252–9.

18. Beard HR, Marquez-Lara AJ, Hamid KS. Using wearable video technology to build a point-of-view surgical education library. JAMA Surg. 2016;151:771–2.

19. Smith HM, Kopp SL, Jacob AK, et al. Designing and implementing a comprehensive learner-centered regional anesthesia curriculum. Reg Anesth Pain Med. 2009;34:88–94.

20. Adhikary SD, Hadzic A, McQuillan PM. Simulator for teaching hand–eye coordination during ultrasound-guided regional anesthesia. Br J Anaesth. 2013;111:844–5.

21. Ahmed OMA, Niessen T, O’Donnell BD, et al. The effect of metrics-based feedback on acquisition of sonographic skills relevant to performance of ultrasound-guided axillary brachial plexus block. Anaesthesia. 2017;72:1117–24.

22. Trueger NS, Thoma B, Hsu CH, et al. The altmetric score: a new measure for article-level dissemination and impact. Ann Emerg Med. 2015;66:549–53.